Google’s new Gemma 3 AI models are fast, frugal, and ready for phones

Google’s AI efforts are synonymous with Gemini, which has become an integral part of its most popular products, from Workspace software to its hardware. However, the company has also been releasing open-source AI models under the Gemma label for over a year now.

Today, Google revealed its third-generation open-source AI models with some impressive claims in tow. The Gemma 3 models come in four variants (1 billion, 4 billion, 12 billion, and 27 billion parameters) and are designed to run on devices ranging from smartphones to beefy workstations.

Ready for mobile devices

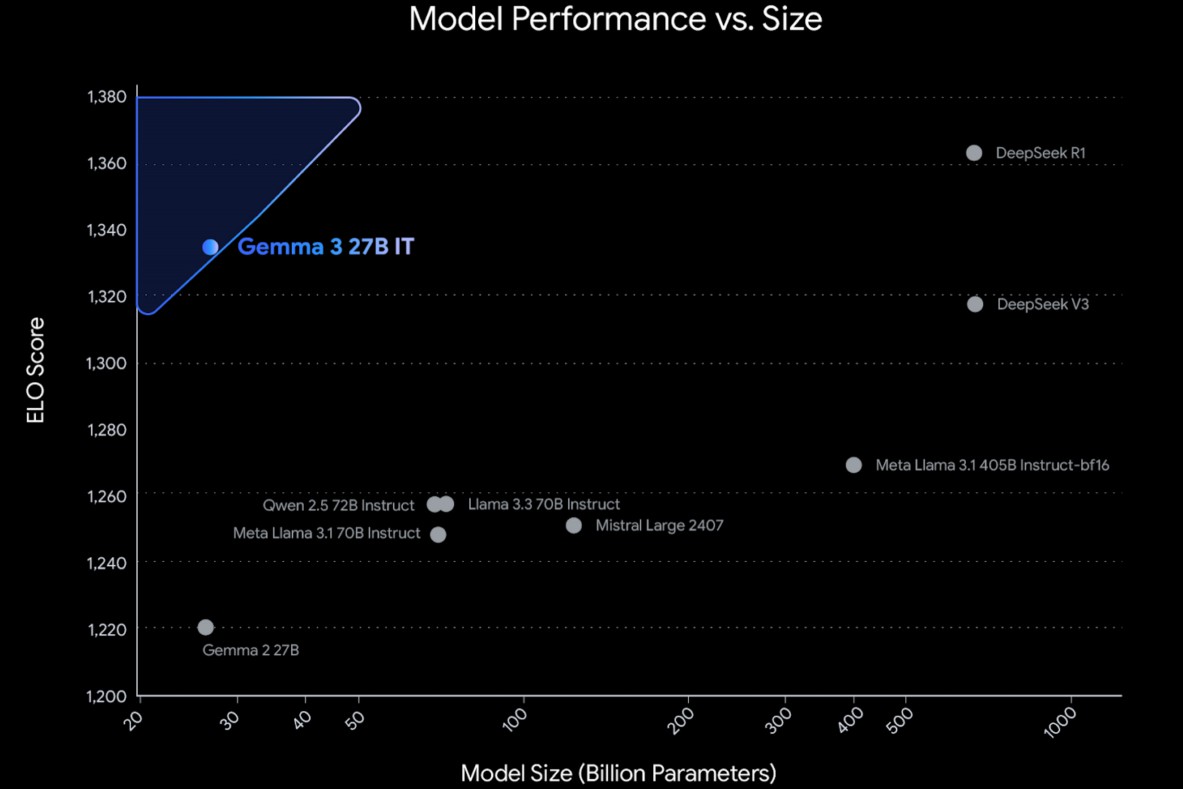

Google says Gemma 3 is the world’s best single-accelerator model, which means it can run on a single GPU or TPU instead of requiring a whole cluster. Theoretically, that means a Gemma 3 AI model could run natively on a Pixel smartphone’s Tensor Processing Unit (TPU), just as the Gemini Nano model already runs locally on phones.

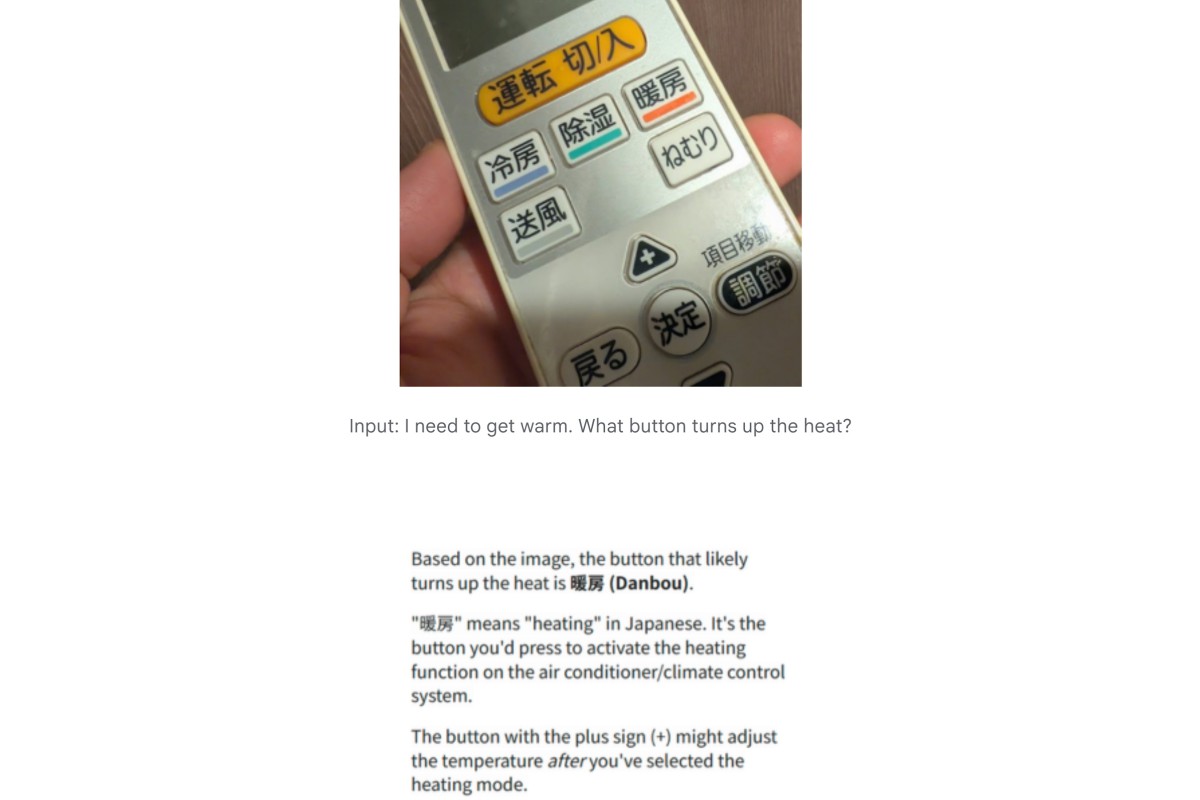

The biggest advantage of Gemma 3 over the Gemini family of AI models is that since it’s open-source, developers can package and ship it according to their unique requirements inside mobile apps and desktop software. Another crucial benefit is that Gemma has pretrained support for over 140 languages, with more than 35 of them supported out of the box.

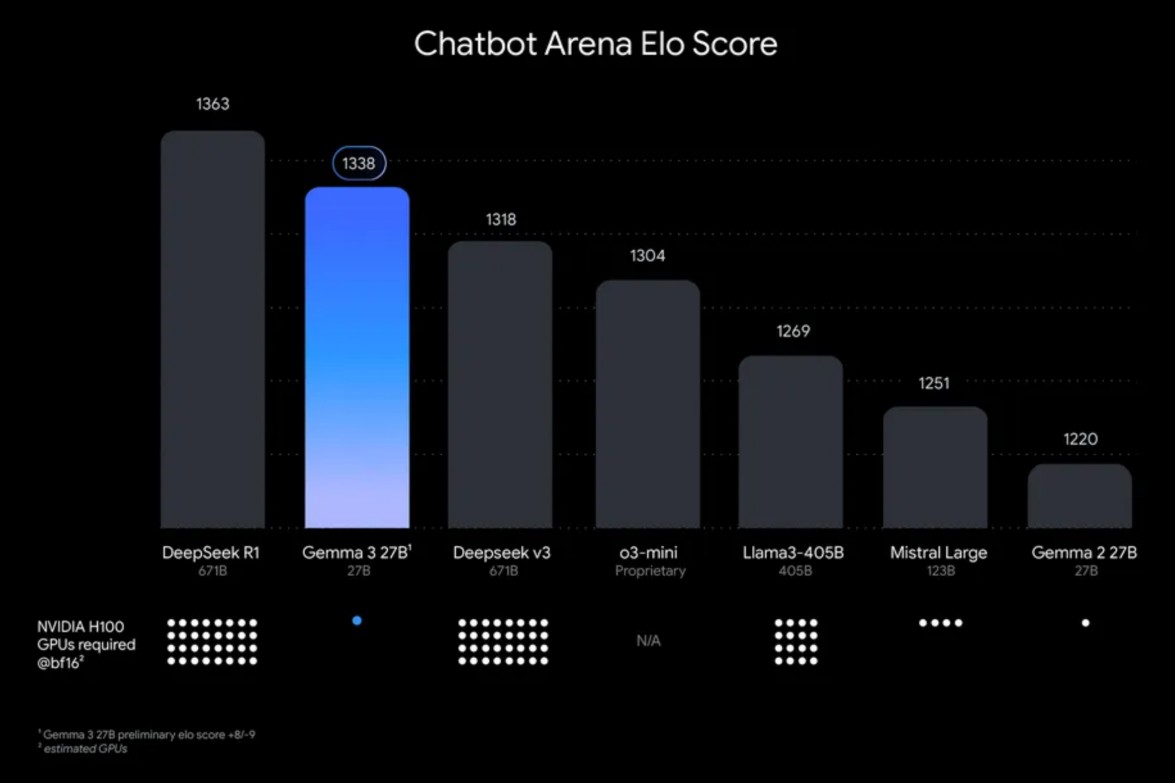

And just like the latest Gemini 2.0 series models, Gemma 3 is also capable of understanding text, images, and videos. In a nutshell, it is multimodal. On the performance side, Gemma 3 is claimed to surpass other popular open-source AI models such as DeepSeek V3, the reasoning-ready OpenAI o3-mini, and Meta’s Llama-405B variant.

Versatile, and ready to deploy

Talking about input range, Gemma 3 offers a context window of 128,000 tokens. That’s enough to cover a full 200-page book pushed as an input. For comparison, the context window for Google’s Gemini 2.0 Flash Lite model stands at a million tokens. In the context of AI models, an average English-language word is roughly equivalent to 1.3 tokens.
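To put those numbers side by side, here is a quick back-of-the-envelope sketch in Python. It uses only the figures quoted above (128,000 tokens, 1.3 tokens per word, 200 pages); the function name and the even split of words across pages are illustrative assumptions, and real tokenizers vary by language and content.

```python
# Rough token/word arithmetic using the article's figures.
TOKENS_PER_WORD = 1.3            # rule of thumb for English text
GEMMA3_CONTEXT_TOKENS = 128_000  # Gemma 3 context window
BOOK_PAGES = 200                 # the "200-page book" from the comparison

def tokens_to_words(tokens: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Approximate how many English words fit in a given token budget."""
    return int(tokens / tokens_per_word)

words = tokens_to_words(GEMMA3_CONTEXT_TOKENS)
words_per_page = words // BOOK_PAGES  # assumes words are spread evenly across pages
print(f"{words:,} words, about {words_per_page} words per page over {BOOK_PAGES} pages")
```

By this estimate, the window holds a little over 98,000 words, or roughly 490 words per page for a 200-page book, which is in line with a densely printed page.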

Gemma 3 also supports function calling and structured output, which essentially means it can interact with external tools and data sources and perform tasks like an automated agent. The nearest analogy would be Gemini, and how it can get work done seamlessly across different platforms such as Gmail or Docs.
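For readers curious about what function calling looks like in practice, the following is a minimal sketch of the application side. It does not call any model: `sample_output` stands in for text a model might emit, and the JSON shape, the `get_weather` tool, and the `dispatch` helper are all hypothetical names chosen for illustration rather than anything defined by Gemma 3.

```python
import json

# Hypothetical tool the application exposes to the model.
def get_weather(city: str) -> str:
    # Placeholder data; a real app would query a weather service here.
    return f"Sunny in {city}"

# Registry mapping tool names to Python callables.
TOOLS = {"get_weather": get_weather}

def dispatch(model_output: str) -> str:
    """Parse a structured (JSON) function call and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Model requested an unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))

# Stand-in for model output; the JSON shape is an assumption.
sample_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
print(dispatch(sample_output))
```

The idea is that structured output gives the app something it can parse reliably, and function calling lets the model choose which tool to run and with what arguments.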

The latest open-source AI models from Google can be deployed either locally or through the company’s cloud-based platforms such as the Vertex AI suite. Gemma 3 AI models are now available via Google AI Studio, as well as third-party repositories such as Hugging Face, Ollama, and Kaggle.
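As one illustration of the local route, here is a hedged sketch that talks to an Ollama server over its HTTP API using only Python's standard library. It assumes Ollama is installed and listening on its default port (11434) and that a Gemma 3 model has already been pulled under the tag `gemma3:1b`; the helper names `build_request` and `generate` are made up for this example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint

def build_request(prompt: str, model: str = "gemma3:1b") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt: str, model: str = "gemma3:1b") -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]

# Example (only works once the server is running and the model is pulled):
# print(generate("Summarize Gemma 3 in one sentence."))
```

Swapping the model tag for a larger variant should be the only change needed on a machine with enough memory, though that depends on which tags are available locally.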

Gemma 3 is part of an industry trend where companies are working on Large Language Models (Gemini, in Google’s case) and simultaneously pushing out small language models (SLMs), as well. Microsoft also follows a similar strategy with its open-source Phi series of small language models.

Small language models such as Gemma and Phi are extremely resource-efficient, which makes them an ideal choice for running on devices such as smartphones. Moreover, as they offer lower latency, they are particularly well-suited for mobile applications.
