RECOMMENDED NEWS

This quirky AI-powered camera prints poems, not photos

The Poetry Camera is an ingenious device that doesn’t take photos but instead makes poems. The clev...

YouTube’s AI Overviews want to make search results smarter

YouTube is experimenting with a new AI feature that could change how people find videos. Here’s th...

Microsoft Copilot Vision turns your phone camera into an interactive visual search tool

Late last year, Microsoft introduced a new AI feature called Copilot Vision for the web, and now it...

Microsoft considers developing AI models to better control Copilot features

Microsoft may be on its way to developing AI models independent of its partnership with OpenAI. Over...

ChatGPT’s Advanced Voice Mode now has a ‘better personality’

If you find that ChatGPT’s Advanced Voice Mode is a little too keen to jump in when you’re engag...

The latest Windows 11 build has a surprising bug — it gets rid of Copilot

Microsoft has updated the support page for the Windows 11 build it released last week to reveal a ra...

Chromebooks are about to get a lot smarter, and more accessible

Google recently announced that Gemini will soon replace Google Assistant everywhere, from your phone...

Read More →

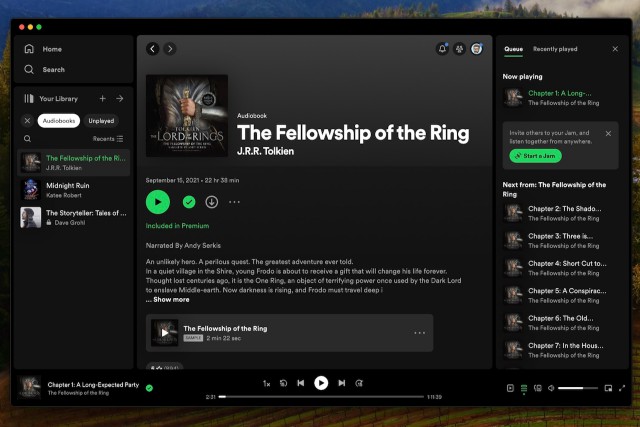

Spotify now offers its listeners AI-narrated audiobooks

In a move expected to dramatically increase the quantity of available ...

Read More →

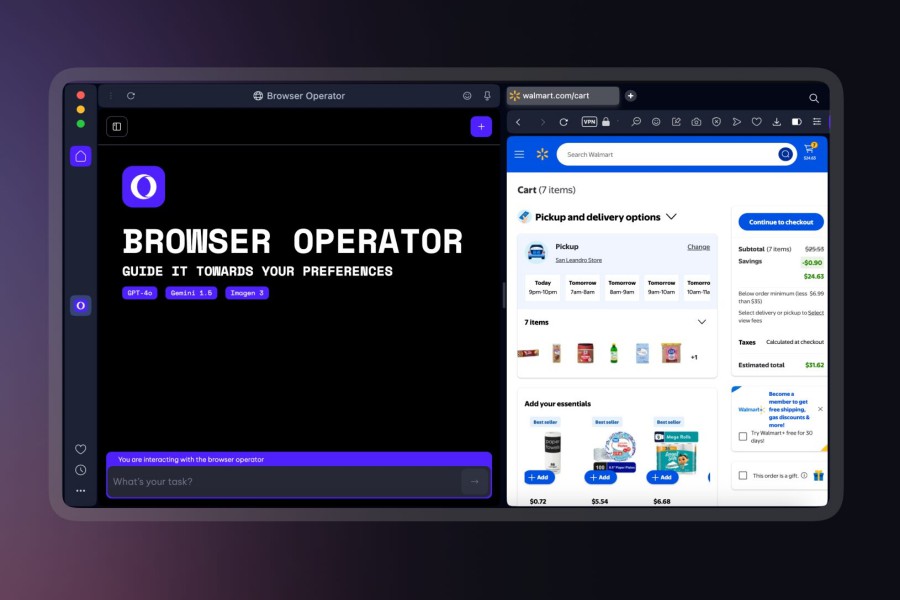

Opera’s Operator will save you the clicks and browse the web for you

Mobile World Congress Read our complete coverage of Mobile Worl...
