RECOMMENDED NEWS

WeTransfer backlash highlights need for smarter AI practices

A recent update to WeTransfer’s terms of service caused consternation after some of its customers ...

Microsoft considers developing AI models to better control Copilot features

Microsoft may be on its way to developing AI models independent of its partnership with OpenAI. Over...

Samsung might put AI smart glasses on the shelves this year

Samsung’s Project Moohan XR headset has grabbed all the spotlight in the past few months, and rig...

OpenAI halts free GPT-4o image generation after Studio Ghibli viral trend

After only one day, OpenAI has put a halt on the free version of its in-app image generator, powered...

‘Princess Mononoke’s U.S. distributor touts box office success ‘in a time when technology tries to replicate humanity’

More than 25 years after its original release, Hayao Miyazaki’s action epic Princess Mononoke is...

Amazon’s AI shopper makes sure you don’t leave without spending

The future of online shopping on Amazon is going to be heavily dependent on AI. Early in 2025, the c...

Read More →

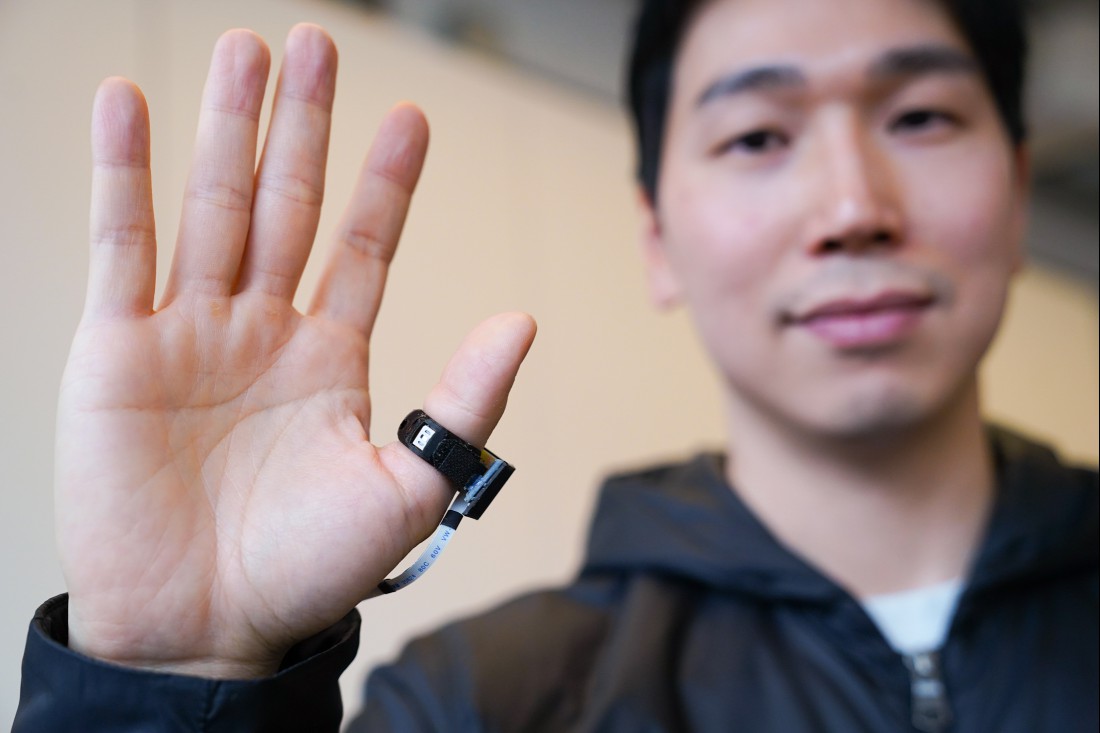

Low-cost smart ring shows the future of sign language input on phones

This story is part of Tech for Change: an ongoi...

Google’s AI can now tell you what to do with your life

Andrew Tarantola / Google Labs

Got a degree and no idea what to do with it? Google’s newest AI feat...

Read More →

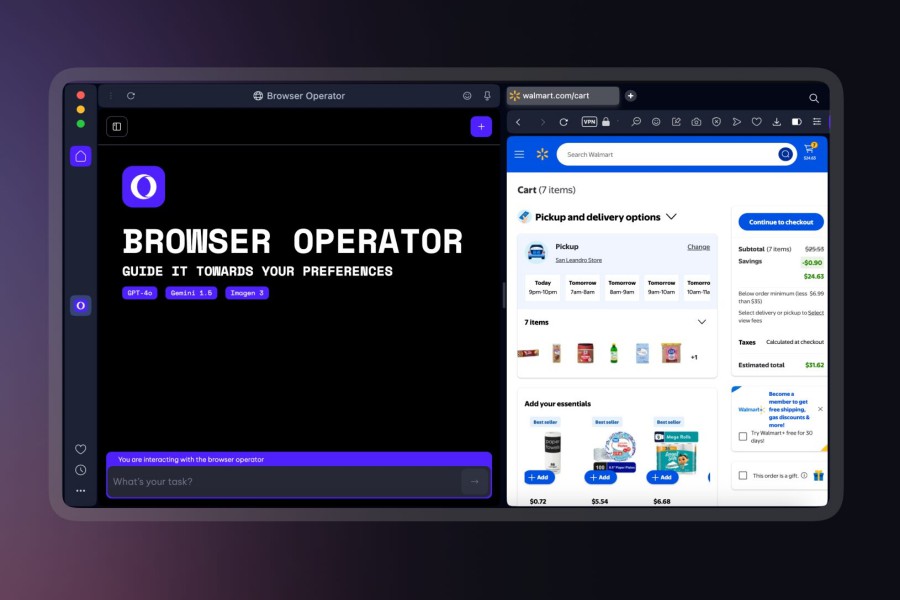

Opera’s Operator will save you the clicks and browse the web for you

Mobile World Congress. Read our complete coverage of Mobile Worl...